Scripting discovery of random YouTube videos

(I just discovered this post as an unpublished draft from about three years ago. No idea why I didn't publish it. The method described in it still works. All the code is bash script. There's a comment in the last bit of code, "needs more refining", which I leave as an exercise for those so inclined.)

Do you now, or have you ever wanted to, script discovery of random YouTube videos? I did recently and couldn't find anything useful online. So I made up my own method.

If you're thinking that YouTube videos are identified by 11-character strings, so you can generate a random 11-character string and use that, you're not technically wrong, but it's not the way to go about it. As a test I generated 1000 and none of them were valid. This isn't at all surprising given how many possible values those 11 characters provide. In my observation, each character can be a lowercase or uppercase letter, a number, or a -. That's 63 possible characters. (An _ can turn up too, which would make it 64, but the point stands.) A calculator tells me that 63^11 is roughly 62050608388552830000. (If you want to say that out loud, say "sixty-two quintillion", then mumble a bit.)
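For anyone who wants to repeat that experiment, here is a minimal sketch of the naive approach. The function names randomCandidateID and candidateSpace are made up for illustration, and the character set is the 63 characters counted above. It only generates candidates and prints the size of the search space; how you check a candidate against YouTube is left out.

```bash
# Hypothetical helper: print one random 11-character candidate ID drawn
# from letters, digits and "-" (the 63 characters counted above).
function randomCandidateID {
  LC_ALL=C tr -dc 'A-Za-z0-9-' < /dev/urandom | head -c 11
}

# Hypothetical helper: size of the search space, 63 choices for each of
# 11 positions. awk works in floating point, so this is an approximation.
function candidateSpace {
  awk 'BEGIN { printf "%.3e\n", 63 ^ 11 }'
}
```

Calling randomCandidateID in a loop 1000 times gives the kind of sample described above, and candidateSpace shows why so few of them are ever likely to be real.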

function getVideoID {

local id="";

while [ "${id}" = "" ];do

id=$(curl -s "https://www.youtube.com/results?search_query=$( < /dev/urandom tr -dc A-Za-z-0-9 | head -c4)" | grep -o 'watch?v=[a-zA-Z0-9_-]\{11\}' | sort -u | sort -R | head -1);

done

echo "${id/watch?v=/}";

}

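That searches YouTube for four random characters, picks one of the video ids in the results at random, and gets you a valid id, such as dQw4w9WgXcQ. If you discover videos entirely at random some of what you find will be NSFW. Really. It will be. The method I use to filter out NSFW content uses youtube-dl.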

function getVideoUrl {

local url="";

url=$(./youtube-dl --age-limit 0 --get-url "https://www.youtube.com/watch?v=${1}");

echo "${url}";

}

videoID=$(getVideoID);

videoUrl=$(getVideoUrl "${videoID}");

If ${videoUrl} is not zero length then, in my experience at least, the video is SFW and its value is a URL of the raw video, which could be used as input for ffmpeg or whatever. (To emphasise, it is *my experience* that this method filters out NSFW content.) If you just want to download the whole video, youtube-dl can do that for you. (youtube-dl will find the highest quality version of the video by default. You may want to change that depending on your available bandwidth or what you intend to do with the video.)
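Putting the two functions together, a minimal sketch of "keep trying until something passes the filter" might look like the following. getSafeVideo and its maxTries argument are hypothetical names, not part of the original method, and the function relies on getVideoID and getVideoUrl being defined as above.

```bash
# Hypothetical wrapper: try random IDs until one comes back from
# getVideoUrl with a non-empty URL, then print that ID.
# maxTries (default 10) is an assumed safety limit so the loop ends.
function getSafeVideo {
  local maxTries="${1:-10}" id="" url="" i=0
  while [ "${i}" -lt "${maxTries}" ]; do
    id=$(getVideoID)
    url=$(getVideoUrl "${id}")
    if [ -n "${url}" ]; then
      # non-empty URL: the video passed the age-limit filter
      echo "${id}"
      return 0
    fi
    i=$((i + 1))
  done
  # nothing suitable found within maxTries attempts
  return 1
}
```

Something like videoID=$(getSafeVideo 20) would then give an id to hand to youtube-dl for downloading. To get something smaller than the default highest quality, youtube-dl's -f option takes a format selector such as 'best[height<=480]'; which selector suits you is your call.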

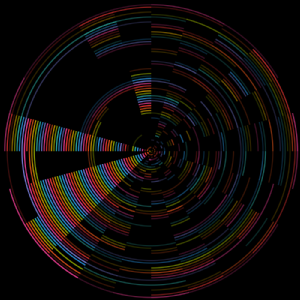

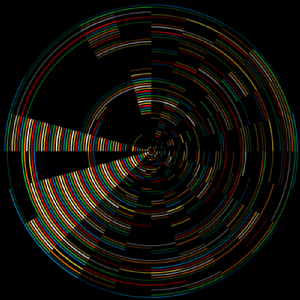

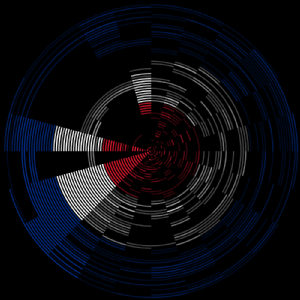

Some videos on YouTube have a video component that is just a static image. E.g. someone's ripped an album and then combined the audio with the album cover art to create something that can be uploaded to YouTube. Such videos are visually uninteresting and maybe you want to identify those videos and discard them rather than use them in whatever it is you're doing that involves random YouTube videos. I did, so I worked out a way of doing that too.

The method I've used is to generate a bunch of images from the video, then compare them in a way which gets a value that represents how much the images differ. If that value is less than a certain threshold, discard the video. I've used GraphicsMagick for comparing the images. ImageMagick can be used too but is slower. (The less powerful your hardware, the bigger the speed difference is. ImageMagick output is slightly different to GraphicsMagick's, so you can't just remove the "gm"; the awk and cut arguments would need changing.) To extract the images you obviously first have to download the video, and in the code below the downloaded video is theVideo.mp4.

# generate an image at 2 second intervals

ffmpeg -loglevel fatal -i theVideo.mp4 -vf fps=1/2 -y foo__%02d.jpg

if [ $? -eq 0 ];then

# get an integer value that represents how different the images all are to each other

v=$(gm compare -metric MAE foo__*.jpg null:- | grep Total | awk '{print $2}' | cut -d . -f 2);

if [ -n "${v}" ] && [ "${v:0:2}" != "00" ] && [ "${v:0:2}" != "01" ];then # needs more refining: 018 and 019 are OK, 010 is not OK, maybe test the 3rd char too

# the video isn't a static image

# do whatever it is you want to do with it

fi

fi
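The code above hands every frame to a single gm compare call. A possible variation, untested against real videos, is to compare consecutive frames in pairs and keep the largest difference seen. The sketch below assumes that gm compare -metric MAE with two images prints a "Total" line whose second field is the normalised difference, which is what the awk in the code above relies on too. maxFrameDifference is a made-up name.

```bash
# Hypothetical helper: given a list of frame files in order, print the
# largest MAE "Total" value found between any two consecutive frames.
# Prints 0 when fewer than two frames are given.
function maxFrameDifference {
  local prev="" frame="" total="" max="0"
  for frame in "$@"; do
    if [ -n "${prev}" ]; then
      # second field of the "Total" line is the normalised difference
      total=$(gm compare -metric MAE "${prev}" "${frame}" | awk '/Total/ {print $2}')
      # keep whichever value is larger; awk does the float comparison
      max=$(awk -v a="${max}" -v b="${total:-0}" 'BEGIN { print ((b + 0 > a + 0) ? b : a) }')
    fi
    prev="${frame}"
  done
  echo "${max}"
}
```

With the frames generated above it would be called as maxFrameDifference foo__*.jpg. A static-image video should give a value close to zero for every pair, so the maximum stays small.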

I arrived at discarding videos where the first two characters of v are 00 or 01 after calculating v for a bunch of videos.
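One way to approach the "needs more refining" part is to stop comparing characters and compare the whole value as a number instead. This is only a sketch: isStaticImage is a made-up name, and the default threshold of 0.015 is a guess sitting between the 010 (not OK) and 018 (OK) cases noted in the code comment, so it would need tuning against real videos.

```bash
# Hypothetical helper: succeed (exit status 0) when the normalised MAE
# total, e.g. 0.0151013834, is below the threshold, meaning the video
# looks like a static image. An empty value counts as 0, so a failed
# comparison is treated as static and the video gets discarded.
function isStaticImage {
  local total="${1}" threshold="${2:-0.015}"
  # awk does the floating point comparison; exit status 0 means "below"
  awk -v t="${total}" -v max="${threshold}" 'BEGIN { exit !(t + 0 < max + 0) }'
}
```

To use it you would keep the full number from gm compare (drop the cut from the pipeline above) and write something like: if ! isStaticImage "${v}"; then ... fi.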

Mike Willis
