Here is a trivial fact about the Poisson point process, which I find curious because it links directly to a concept from modern algebra (the notion of a free abelian group).

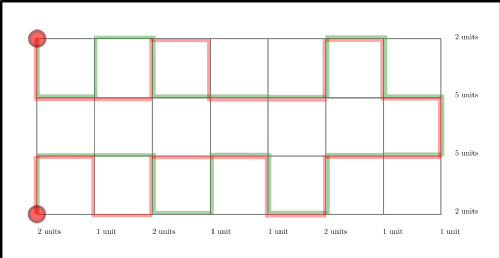

Recall that a Poisson point process on the real line can be viewed as a (locally finite) random pattern of points. We can define it in terms of its consecutive inter-point spacing lengths, Zn for n running through the integers. If Z0 corresponds to the spacing containing the origin, then the Zn are independent, with all but Z0 being of unit Exponential distribution and Z0 being of Exponential distribution of mean 2. (The discrepant behaviour of the Z0 distribution is due to size-biasing, and is associated with the famous Waiting Time Paradox.)

Suppose I secretly select finitely many different spacings, Zi1 , Zi2 , ..., Zik, and tell you only the total length of the spacings I have selected, namely z = Zi1 + Zi2 +...+ Zik.

If you are allowed to inspect closely the relevant realization of the unit-rate Poisson point process, then knowledge of the total length z is all you need to determine which are the spacings i1 < i2 < ... < ik that have been selected.

Indication of proof: the Zi's are independent with Exponential distributions (unit rate except for i=0, for which the rate is 2). But this means that almost surely the spacing lengths Zi generate a free abelian group under addition. In particular, almost surely

(Zi1 + Zi2 + ... + Zik) - (Zj1 + Zj2 + ... + Zjr)

must be non-zero unless the sequences i1 < i2 < ... < ik and j1 < j2 < ... < jr are identical. So (since there are only countably many finite integer-coefficient linear combinations) almost surely z = Zi1 + Zi2 +...+ Zik determines the sequence i1 < i2 < ... < ik.

End of proof.

Notes:

- Free abelian groups are defined in the Encyclopaedia of Mathematics. I learnt about these in my second undergraduate year and then never thought about them again till I noticed this phenomenon just recently.

- So is the notion of a Poisson (point) process.

- For a child-friendly explanation of the Waiting Time paradox, see Masuda N and Porter MA (2021) The Waiting-Time Paradox. Frontiers for Young Minds 8:582433. DOI: 10.3389/frym.2020.582433.

- The result generalizes easily to stationary renewal processes for which the inter-point spacing has a probability density.

- I came across this trivial fact while working on generalizing my paper on random lines and metric spaces (Kendall, W. S. (2017). From random lines to metric spaces. Annals of Probability, 45(1), 469–517. https://doi.org/10.1214/14-AOP935); the Poisson process result is noted there (without the modern algebra adornments) in the process of proving that planar line-pattern-based geodesics between prescribed points are almost surely unique ...

Wilfrid Kendall

Wilfrid Kendall

Please wait - comments are loading

Please wait - comments are loading

It is not unheard-of for Stats MSc students to find their MSc dissertation leading to a publication, but rather less common for the publication to be a component of a book on early medieval history! But that's what happened to Clair Barnes' MSc dissertation ("Statistics in Anglo-Saxon Archaeology", Department of Statistics, Warwick, 2015); you can read all about it in:

It is not unheard-of for Stats MSc students to find their MSc dissertation leading to a publication, but rather less common for the publication to be a component of a book on early medieval history! But that's what happened to Clair Barnes' MSc dissertation ("Statistics in Anglo-Saxon Archaeology", Department of Statistics, Warwick, 2015); you can read all about it in:

Loading…

Loading…